Building Smarter LLM Applications with DSPy

In today’s AI-driven world, demand for applications powered by Large Language Models (LLMs) has exploded. Yet while LLMs are extraordinarily capable, building reliable, scalable, and efficient applications on top of them remains a major challenge. This is where DSPy comes in: an open-source framework that rethinks how developers build and optimize LLM-powered systems.

Instead of relying on fragile prompts or endless trial and error, DSPy empowers developers to build smarter applications through a structured, modular, and optimization-first approach.

What is DSPy?

DSPy is a declarative framework designed to make LLM development more reliable, scalable, and maintainable. Rather than hand-writing a prompt for every task, you declare what each step should take in and produce, and DSPy turns those declarations into prompts. Given a metric and some examples, its optimizers can then search for better instructions and few-shot examples, and can even fine-tune model weights.

Think of DSPy as the “software engineering toolkit” for LLMs: it lets you build components, connect them, optimize them, and manage them systematically — much like how you build traditional software applications.

Why use DSPy?

Here’s why DSPy is making waves in the AI development community:

- Prompt engineering automation: Instead of manually crafting and tweaking prompts, DSPy optimizes prompts for you.

- Reusability and modularity: Create small components (modules) that can be reused across different applications.

- Portability across models: Build once, and run your application across different LLMs (e.g., OpenAI, Anthropic, Hugging Face) with minimal changes.

- Optimization for real goals: Given a metric you define (accuracy, exact match, or a custom score), DSPy can search for better instructions and few-shot examples, and can optionally fine-tune model weights, to maximize it.

- Structured workflows: Chain multiple modules together into complex workflows — without losing clarity or control.

How DSPy works

At the heart of DSPy are two key concepts:

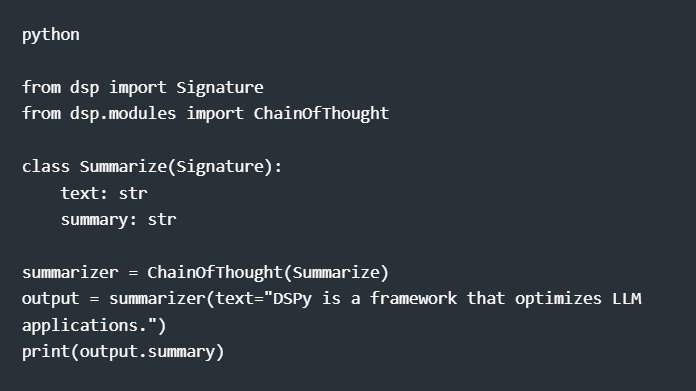

- Signatures: A signature declares what a step should do: its input fields, its output fields, and an instruction describing the task. Example: take a paragraph as input and return a one-sentence summary.

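- Modules: A module defines how a signature is carried out. dspy.Predict makes a single LM call, while dspy.ChainOfThought asks the model to reason step by step before answering. Modules hold parameters (instructions and few-shot demonstrations) that optimizers can tune, and they can be composed into larger programs.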

This separation allows you to focus on desired outcomes, while DSPy figures out the best way to get there.

A simple example flow:

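Behind the scenes, DSPy turns the signature into a prompt for the configured model and parses the response back into the declared output field. The program can later be compiled with an optimizer and a metric, which may restructure the instructions and add few-shot examples to improve results.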

Key features that make DSPy stand out

- Automatic few-shot example selection: Optimizers such as BootstrapFewShot run your program on training examples, keep the traces that pass your metric, and reuse them as few-shot demonstrations.

- Built-in optimization engines: Strategies such as bootstrapped few-shot selection, random search over candidate demonstration sets, and Bayesian optimization of instructions and examples (MIPROv2) tune applications automatically.

- Multi-model support: Compatible with many LLM providers and local models.

- Composable pipelines: Build complex applications by linking modules together.

- Open and extensible: As an open-source project, DSPy is continuously evolving with community contributions.

Example use cases

- Intelligent agents – Create agents that can reason, plan, and solve tasks through multi-step workflows, all managed modularly.

- Enhanced retrieval-augmented generation (RAG) – Optimize how queries are generated, how documents are retrieved, and how final answers are synthesized.

- Custom chatbots – Develop domain-specific conversational agents that can adapt and optimize without fragile hardcoded prompts.

- Automated content creation – Create content pipelines that automatically adjust tone, structure, and style based on the target audience.

Getting started with DSPy

You can install DSPy from PyPI with a single command:

pip install -U dspy

DSPy’s documentation and community are active, offering quick-start guides, examples, and optimization recipes for developers at all levels.

The future of LLM application development

Just as frameworks like TensorFlow revolutionized deep learning, DSPy has the potential to redefine how we program with language models. By focusing on abstraction, optimization, and modularity, DSPy enables developers to build smarter, more robust LLM applications without getting bogged down in prompt engineering minutiae.

In a future where AI applications are everywhere, tools like DSPy will be critical for turning raw model power into real-world impact.

Final thought:

If you’re building LLM applications and want them to be smarter, more reliable, and easier to manage, it’s time to explore DSPy. The future of AI development isn’t just about bigger models — it’s about building better systems.

Our services:

- Staffing: Contract, contract-to-hire, direct hire, remote global hiring, SOW projects, and managed services.

- Remote hiring: Hire full-time IT professionals from our India-based talent network.

- Custom software development: Web/Mobile Development, UI/UX Design, QA & Automation, API Integration, DevOps, and Product Development.

Our products:

- ZenBasket: A customizable ecommerce platform.

- Zenyo payroll: Automated payroll processing for India.

- Zenyo workforce: Streamlined HR and productivity tools.


Send Us Email

contact@centizen.com

Centizen

A Leading Staffing, Custom Software and SaaS Product Development company founded in 2003. We offer a wide range of scalable, innovative IT Staffing and Software Development Solutions.

Call Us

India: +91 63807-80156

USA & Canada: +1 (971) 420-1700
